¶ Kubernetes Environment Access

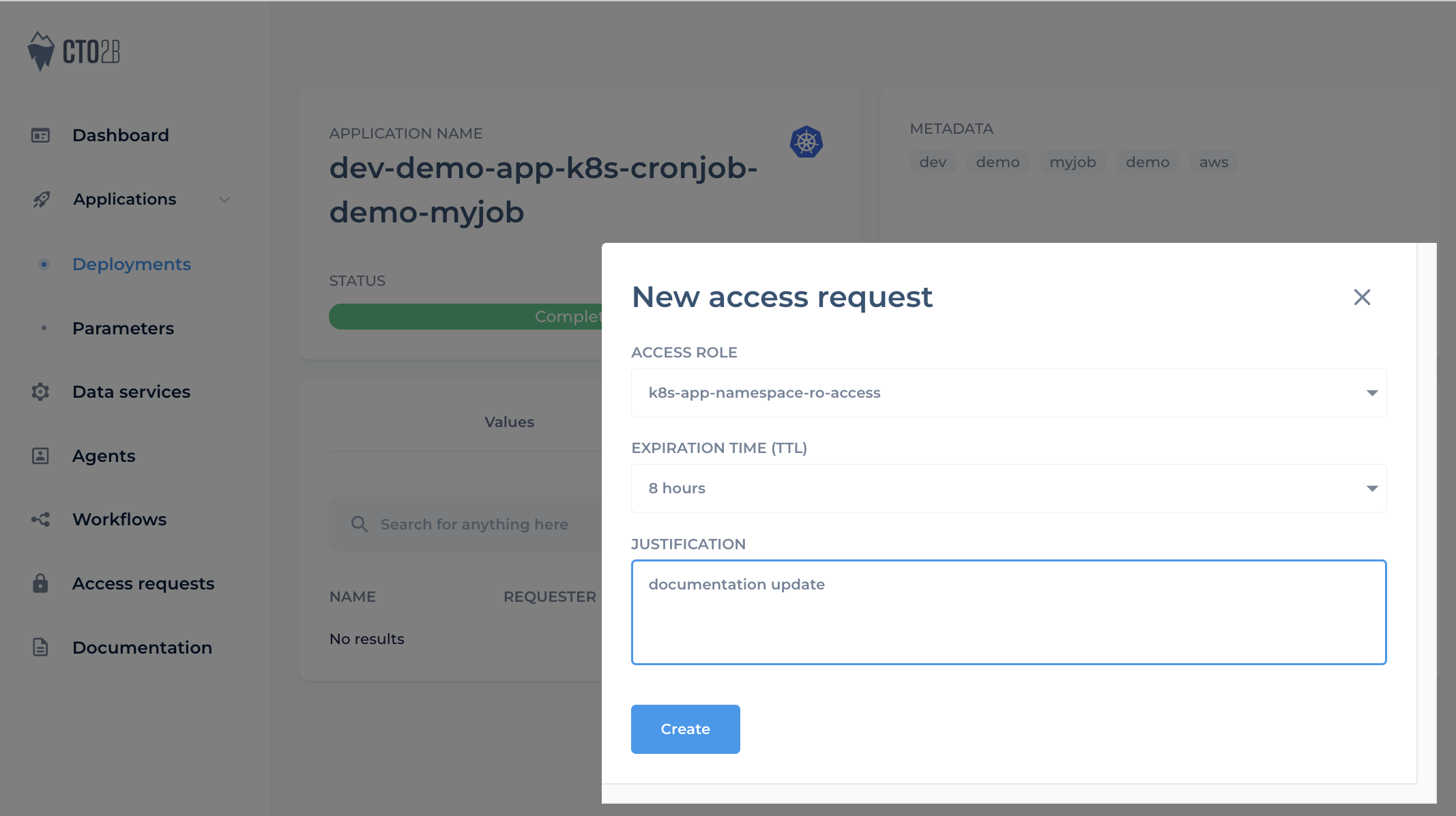

Follow the same steps as in DB Access to request access, but use the k8s-app-namespace-ro-access role.

- Make sure your access request is approved.

- If you have an open tsh session, you need to log out first; otherwise run tsh login. If you are logging in for the first time, run the full login command shown below.

Note: the command below is required only if you are logging in for the first time or have logged out.

tsh login --user [email protected] --proxy https://teleport.manage.cto2b.eu

List the available Kubernetes clusters and log in to the one you need.

$ tsh kube ls

Kube Cluster Name Labels                                           Selected
----------------- ------------------------------------------------ --------
demo-dev-demo     cloud=aws,environment=demo,stage=dev,tenant=demo

$ tsh kube login demo-dev-demo

Logged into Kubernetes cluster "demo-dev-demo". Try 'kubectl version' to test the connection.
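The login steps above can be wrapped in a small helper so that the full login only runs when it is needed. This is a minimal sketch, not an official procedure: the function name teleport_kube_login and the TSH_USER variable are hypothetical, and it assumes that `tsh status` exits with a non-zero code when there is no valid session.

```shell
#!/usr/bin/env bash
# Sketch: log in to Teleport only when needed, then select a Kubernetes cluster.
# Assumption: `tsh status` returns a non-zero exit code without a valid session.

PROXY="https://teleport.manage.cto2b.eu"   # proxy address from the login command above

# teleport_kube_login CLUSTER
teleport_kube_login() {
  local cluster="$1"
  if ! tsh status >/dev/null 2>&1; then
    # TSH_USER is a hypothetical variable holding your Teleport user name.
    tsh login --user "${TSH_USER:?set TSH_USER to your Teleport user}" --proxy "$PROXY" || return 1
  fi
  tsh kube login "$cluster"
}

# Usage:
#   export TSH_USER=<your Teleport user>
#   teleport_kube_login demo-dev-demo
```

If the session is still valid, only the `tsh kube login` step runs.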

¶ CronJobs

Note: this example uses the demo namespace and an arbitrary job name. Use the names that apply to your environment.

List the CronJobs in all namespaces:

$ kubectl get cronjobs -A

NAMESPACE   NAME                                     SCHEDULE    SUSPEND   ACTIVE   LAST SCHEDULE   AGE
demo        dev-demo-app-k8s-cronjob-demo-myjob-j1   0 2 * * *   False     0        <none>          62m

¶ Trigger Cronjob

Triggering a CronJob manually creates a Job object in Kubernetes, and the Job in turn creates a pod.

$ kubectl create job --from=cronjob/dev-demo-app-k8s-cronjob-demo-myjob-j1 -n demo myjob-manual-001

- Check the status of the pods created by the manually triggered Job:

$ kubectl get pods -n demo | grep myjob

myjob-manual-001-0-krbx7 1/1 Running 0 34s

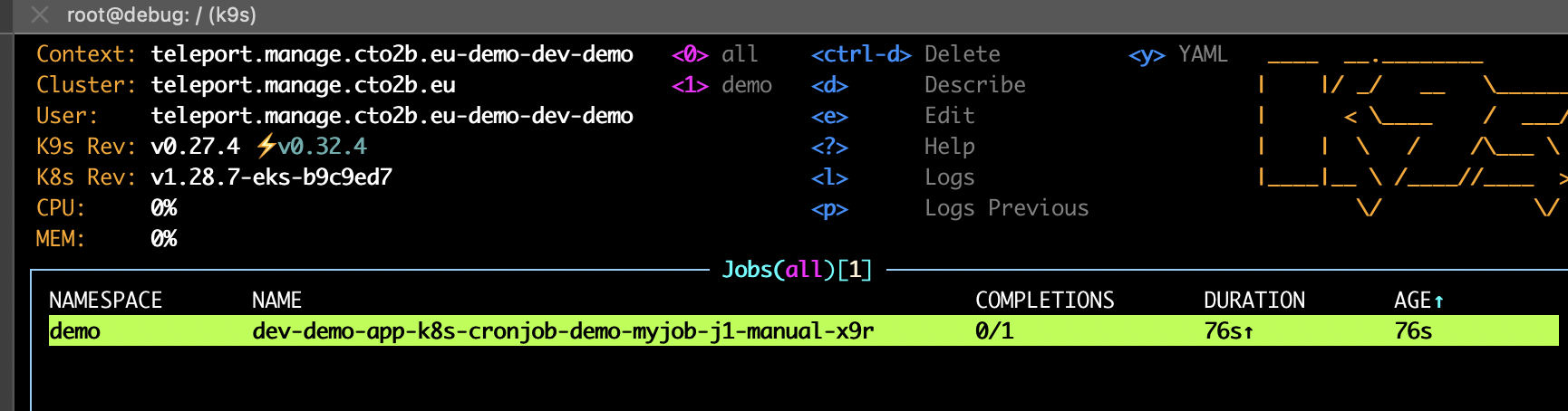

- Check the Job status. In this example the Job has not completed yet: COMPLETIONS shows 0/1 (0 of 1).

$ kubectl get job -A

NAMESPACE   NAME               COMPLETIONS   DURATION   AGE
demo        myjob-manual-001   0/1           5m31s      5m31s
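The trigger and status-check steps above can be combined into one helper. This is a sketch under assumptions: the function names and the timestamp-based naming scheme are hypothetical, and the default timeout of 600s is arbitrary. It uses `kubectl create job --from=cronjob/...` as shown above, followed by `kubectl wait` on the Job's Complete condition.

```shell
#!/usr/bin/env bash
# Sketch: trigger a CronJob manually under a unique Job name and wait for it.

# Build a Job name such as "myjob-manual-20240131093000" (hypothetical naming scheme).
manual_job_name() {
  printf '%s-manual-%s\n' "$1" "$(date +%Y%m%d%H%M%S)"
}

# trigger_cronjob NAMESPACE CRONJOB JOB_NAME [TIMEOUT]
trigger_cronjob() {
  local ns="$1" cronjob="$2" job="$3" timeout="${4:-600s}"
  kubectl create job --from="cronjob/${cronjob}" -n "$ns" "$job" || return 1
  # Blocks until the Job reports the Complete condition or the timeout expires.
  kubectl wait --for=condition=complete "job/${job}" -n "$ns" --timeout="$timeout"
}

# Usage:
#   trigger_cronjob demo dev-demo-app-k8s-cronjob-demo-myjob-j1 "$(manual_job_name myjob)"
```

Note that waiting on the Complete condition does not return early when the Job fails; in that case the command runs until the timeout.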

¶ Delete Job

- Deleting a Job also deletes all of its associated pods:

$ kubectl delete job myjob-manual-001 -n demo

job.batch "myjob-manual-001" deleted

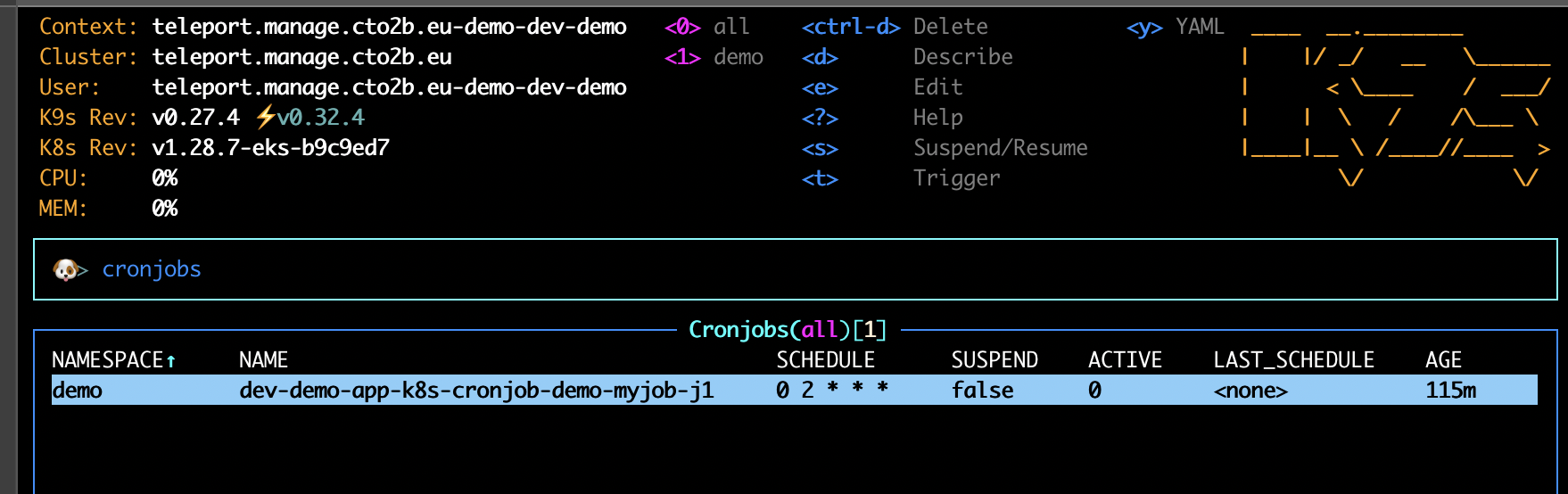

¶ Cronjob Operations in K9s

- Download and install K9s: https://k9scli.io/topics/install/

- Make sure you are in the right context; use kubectx to check or switch:

$ kubectx

Switched to context "teleport.manage.cto2b.eu-demo-dev-demo".

- Press Shift + :, type cronjobs, and press Enter. Make sure you are in the right namespace, or press 0 to show all namespaces.

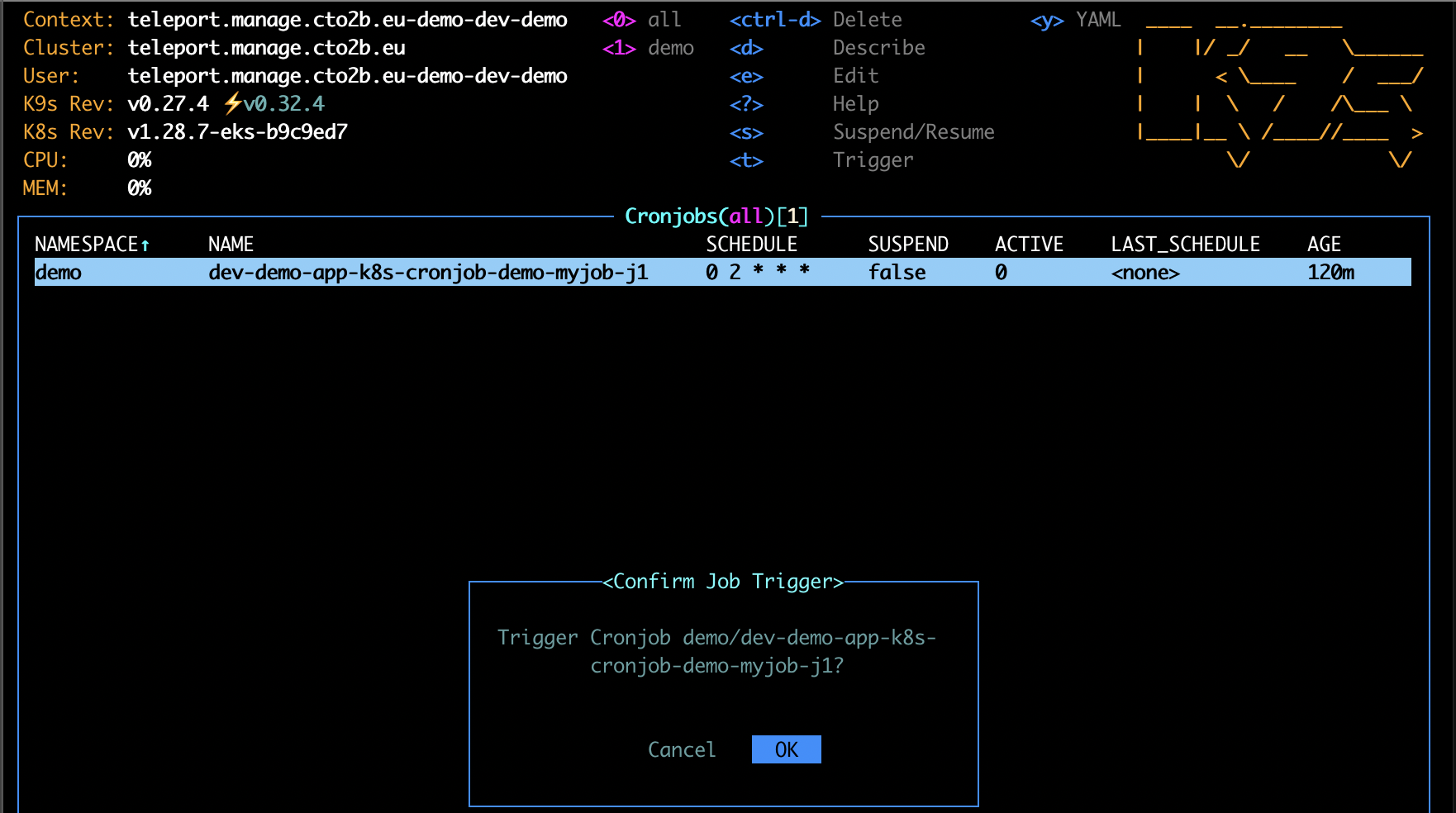

- Press t to trigger the CronJob and confirm with OK.

- To check pods or jobs, use the same approach: press Shift + :, type pods or jobs, and press Enter.

To modify or delete resources, follow the key hints shown at the top of the K9s screen.

¶ Logs

- To follow the logs of a running, manually triggered Job in real time, select its pod in K9s and press l, or run:

$ kubectl logs -f myjob-manual-001-0-krbx7 -n demo
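Because pod names carry a generated suffix, it can be convenient to address the logs by Job name instead. The following is a minimal sketch; the function name job_logs is hypothetical. It relies on the job-name label that Kubernetes sets on pods created by a Job, and on `kubectl logs` accepting a job/<name> reference.

```shell
#!/usr/bin/env bash
# Sketch: follow the logs of a Job without looking up the pod name first.

# job_logs NAMESPACE JOB_NAME
job_logs() {
  local ns="$1" job="$2"
  # Pods created by a Job carry the label job-name=<job name>.
  kubectl get pods -n "$ns" -l "job-name=${job}" || return 1
  # `kubectl logs` accepts job/<name> and streams from one of the Job's pods.
  kubectl logs -f "job/${job}" -n "$ns"
}

# Usage: job_logs demo myjob-manual-001
```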

¶ CronJob default values

- CronJob manifest:

jobs:
  - name: j1
    suspend: false
    activeDeadlineSeconds: 1200
    securityContext:
      runAsUser: 1000
      runAsGroup: 1000
      fsGroup: 2000
    # optional env vars
    env: # set the value to [] to pass no env vars
      - name: DEFAULT_DB_NAME
        value: "test"
    envFrom:
      - secretRef:
          name: secret_data
      - configMapRef:
          name: config_data
    schedule: "* * * * *"
    command: ["/usr/bin/dumb-init"]
    args:
      - --
      - /opt/app/entrypoint.sh
      - run
    serviceAccount:
      create: false
      name: "busybox-serviceaccount"
    resources:
      limits:
        cpu: 50m
        memory: 256Mi
      requests:
        cpu: 50m
        memory: 256Mi
    backoffLimit: 2
    jobFailureRules:
      - action: FailJob
        onPodConditions: null
        onExitCodes:
          containerName: j1
          operator: In
          values: [1,42]
      - action: Ignore
        onExitCodes: null
        onPodConditions:
          - status: 'False'
            type: ContainersReady
    ttlSecondsAfterFinished: 10
    failedJobsHistoryLimit: 1
    successfulJobsHistoryLimit: 3
    concurrencyPolicy: Forbid
    # if jobFailureRules are configured, restartPolicy is forcibly set to Never, even if another value is provided
    restartPolicy: Never
    tolerations:
      - effect: NoSchedule
        operator: Exists
    affinity:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
            - matchExpressions:
                - key: pool
                  operator: In
                  values:
                    - main
                    - spot
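To see which of these values actually ended up on a deployed CronJob, the live object can be queried. This is a sketch under assumptions: the function name cronjob_settings is hypothetical, and it assumes the values above are rendered into the standard CronJob fields (spec.schedule, spec.concurrencyPolicy, spec.jobTemplate.spec.backoffLimit, spec.jobTemplate.spec.ttlSecondsAfterFinished), which the source does not confirm.

```shell
#!/usr/bin/env bash
# Sketch: print a few effective settings of a deployed CronJob.
# Assumption: the values are rendered into the standard CronJob spec fields.

# cronjob_settings NAMESPACE CRONJOB
cronjob_settings() {
  local ns="$1" cronjob="$2"
  local cols="SCHEDULE:.spec.schedule"
  cols="${cols},CONCURRENCY:.spec.concurrencyPolicy"
  cols="${cols},BACKOFF:.spec.jobTemplate.spec.backoffLimit"
  cols="${cols},TTL:.spec.jobTemplate.spec.ttlSecondsAfterFinished"
  kubectl get cronjob "$cronjob" -n "$ns" -o "custom-columns=${cols}"
}

# Usage: cronjob_settings demo dev-demo-app-k8s-cronjob-demo-myjob-j1
```

If ttlSecondsAfterFinished is applied to the Job as in standard Kubernetes, a finished Job and its pods are removed shortly after completion, so logs are best collected while the Job is still running.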